Confidence Interval Calculator

Use this confidence interval calculator to easily calculate the confidence bounds for a one-sample statistic or for differences between two proportions or means (two independent samples). One-sided and two-sided intervals are supported, as well as confidence intervals for relative difference (percent difference). The calculator will also output a p-value and Z-score if "difference between two groups" is selected.

- Using the confidence interval calculator

- What is a confidence interval and "confidence level"

- Confidence interval formula

- How to interpret a confidence interval

- Common misinterpretations of confidence intervals

- One-sided vs. two-sided intervals

- Confidence intervals for relative difference

Using the confidence interval calculator

This confidence interval calculator allows you to perform a post-hoc statistical evaluation of a set of data when the outcome of interest is the absolute difference of two proportions (binomial data, e.g. conversion rate or event rate), the absolute difference of two means (continuous data, e.g. height, weight, speed, time, revenue, etc.), or the relative difference between two proportions or two means. You can also calculate a confidence interval for the mean of a single group. It uses the Z-distribution (normal distribution). You can select any confidence level you require.

To calculate a confidence interval for a single group, you need to know the sample size, the sample standard deviation and the sample arithmetic mean.

When entering data for a CI for a difference in proportions, provide the sample sizes of the two groups as well as the number or rate of events in each. You can enter that as a proportion (e.g. 0.10), a percentage (e.g. 10%) or just the raw number of events (e.g. 50).

If entering means data, make sure the tool is in "raw data" mode and simply copy/paste or type in the raw data, with observations separated by commas, spaces, new lines or tabs. Copy-pasting from a Google Sheets or Excel spreadsheet works fine.
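
As an illustration of the raw-data input format, the hypothetical helper `parse_raw_data` below splits pasted text on commas and whitespace (spaces, tabs, new lines) and converts each token to a number. It is a sketch, not the calculator's actual parser; among other things it assumes a period is used as the decimal separator.

```python
import re

def parse_raw_data(text: str) -> list[float]:
    """Hypothetical parser: split on commas, spaces, tabs or new lines."""
    # One or more separators in a row count as a single break
    tokens = re.split(r"[,\s]+", text.strip())
    # Drop empty tokens (e.g. from empty input) and convert the rest to floats
    return [float(t) for t in tokens if t]

# Values as they might look when pasted from a spreadsheet plus a typed line
pasted = "12.5\n13.1\t14.0, 11.8 12.9"
print(parse_raw_data(pasted))  # [12.5, 13.1, 14.0, 11.8, 12.9]
```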

The confidence interval calculator will output: two-sided confidence interval, left-sided and right-sided confidence interval, as well as the mean or difference ± the standard error of the mean (SEM). It works for comparing independent samples, or for assessing if a sample belongs to a known population. For means data the calculator will also output the sample sizes, means, and pooled standard error of the mean. The Z-score (z statistic) and the p-value for the one-sided hypothesis (one-tailed test) will also be printed when calculating a confidence interval for the difference between proportions or means, allowing you to infer the direction of the effect.
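
To show how a confidence interval, a Z-score and a one-sided p-value relate for a difference between two proportions, here is a minimal sketch. The function name `diff_in_proportions` and the example counts are hypothetical, and it assumes the unpooled (Wald) standard error; this page does not state which standard error the calculator itself uses for proportions.

```python
from math import sqrt
from statistics import NormalDist

def diff_in_proportions(events1, n1, events2, n2, conf=0.95):
    """Two-sided CI, Z-score and one-sided p-value for p2 - p1.

    Assumption: unpooled (Wald) standard error, not necessarily the
    calculator's own choice.
    """
    p1, p2 = events1 / n1, events2 / n2
    diff = p2 - p1
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    z_crit = NormalDist().inv_cdf(1 - (1 - conf) / 2)  # two-sided critical value
    z_score = diff / se                                  # observed difference in SE units
    p_one_sided = 1 - NormalDist().cdf(z_score)          # H0: p2 - p1 <= 0
    return (diff - z_crit * se, diff + z_crit * se), z_score, p_one_sided

# Control: 50 events out of 500; treatment: 75 events out of 500
(low, high), z, p = diff_in_proportions(50, 500, 75, 500)
print(f"CI: ({low:.4f}, {high:.4f}), Z = {z:.3f}, one-sided p = {p:.4f}")
```

A small one-sided p-value here supports p2 > p1; for the opposite direction the one-sided p-value is `NormalDist().cdf(z_score)` instead.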

By default a 95% confidence interval is calculated, but the confidence level can be changed to match the required level of uncertainty.

Warning: You must have fixed the sample size / stopping time of your experiment in advance. Doing otherwise means being guilty of optional stopping (fishing for significance), which will result in intervals with lower coverage than the nominal level. Also, you should not use this confidence interval calculator for comparisons of more than two means or proportions, or for comparisons of two groups based on more than one metric. If your experiment involves more than one treatment group or has more than one outcome variable, you need a more advanced calculator which corrects for multiple comparisons and multiple testing. This statistical calculator might help.

What is a confidence interval and "confidence level"

A confidence interval is defined by an upper and lower boundary (limit) for the value of a variable of interest, and it aims to aid in assessing the uncertainty associated with a measurement, usually in an experimental context, but also in observational studies. The wider an interval is, the more uncertainty there is in the estimate. Every confidence interval is constructed based on a particular required confidence level, e.g. 0.90, 0.95, 0.99 (90%, 95%, 99%), which is also the coverage probability of the interval. A 95% confidence interval (CI) procedure, for example, will produce intervals that contain the true value of interest 95% of the time (in about 95 out of 100 similar experiments).
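
The coverage property can be checked by simulation. The sketch below draws many samples from a normal population with a known, arbitrarily chosen mean, builds a two-sided 95% Z-interval from each one, and counts how often the interval contains the true mean. The function name `simulate_coverage` and all parameter values are illustrative assumptions.

```python
import random
from statistics import NormalDist, fmean, stdev

def simulate_coverage(true_mean=10.0, sd=2.0, n=50, conf=0.95, runs=10_000, seed=1):
    """Fraction of simulated experiments whose Z-interval covers true_mean."""
    rng = random.Random(seed)  # seeded for reproducibility
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    hits = 0
    for _ in range(runs):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        half_width = z * stdev(sample) / n ** 0.5
        if abs(fmean(sample) - true_mean) <= half_width:
            hits += 1
    return hits / runs

print(simulate_coverage())  # close to 0.95
```

Because the sample standard deviation stands in for the unknown population value, coverage at small sample sizes comes out slightly below the nominal level and approaches it as n grows.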

Simple two-sided confidence intervals are symmetrical around the observed mean. This confidence interval calculator is expected to produce only such results for means, proportions and their absolute differences. In certain scenarios where more complex models are deployed, such as in sequential monitoring, asymmetrical intervals may be produced. In any particular case the true value may lie anywhere within the interval, or it might not be contained within it, no matter how high the confidence level is. Raising the confidence level widens the interval, while decreasing it makes it narrower. Similarly, larger sample sizes result in narrower confidence intervals: the standard error, and with it the interval width, shrinks in proportion to 1/√n, so the interval converges toward a single point as the sample size grows.
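
A quick way to see both effects is to compute the width of a Z-interval for a mean, 2 × Z × σ / √n, for several sample sizes and confidence levels. The helper name `z_interval_width` and the standard deviation of 2.0 are illustrative assumptions.

```python
from statistics import NormalDist

def z_interval_width(sd, n, conf):
    """Full width of a two-sided Z-interval for a mean: 2 * Z * sd / sqrt(n)."""
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    return 2 * z * sd / n ** 0.5

for n in (25, 100, 400):
    for conf in (0.90, 0.95, 0.99):
        print(n, conf, round(z_interval_width(sd=2.0, n=n, conf=conf), 3))
```

Each fourfold increase in n halves the width, while moving from 90% to 99% confidence widens it at any fixed n.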

Confidence interval formula

The mathematics of calculating a confidence interval are not that difficult. The generic formula used in a Z-based CI calculator is the observed statistic (mean, proportion, or otherwise) plus or minus the margin of error, which is the standard error (SE) of the statistic multiplied by the critical value Z for the chosen confidence level. It is the basis of any confidence interval calculation of this kind:

$$\text{CI bounds} = \bar{X} \pm Z \cdot SE$$

In answering specific questions different variations apply. The formula when calculating a one-sample confidence interval is:

$$\bar{X} \pm Z \cdot \frac{\sigma}{\sqrt{n}}$$

where n is the number of observations in the sample, X̄ (read "X bar") is the arithmetic mean of the sample and σ is the sample standard deviation (σ² is the variance).
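
The one-sample interval described above (sample mean ± Z times σ/√n) can be sketched in a few lines. The function name `one_sample_ci` and the measurements are made up for illustration.

```python
from statistics import NormalDist, fmean, stdev

def one_sample_ci(data, conf=0.95):
    """Two-sided Z-interval for a mean: X-bar ± Z * s / sqrt(n)."""
    n = len(data)
    x_bar = fmean(data)
    sem = stdev(data) / n ** 0.5                  # standard error of the mean
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)  # about 1.96 for 95%
    return x_bar - z * sem, x_bar + z * sem

# Made-up measurements, for illustration only
heights = [172.0, 168.5, 181.2, 175.3, 169.9, 177.8, 174.1, 170.6]
print(one_sample_ci(heights))
```

With only eight observations a t-based interval would be somewhat wider; the sketch uses Z to mirror the Z-distribution approach described on this page.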

The formula for a two-sample confidence interval for the difference of means or proportions is:

$$(\mu_2 - \mu_1) \pm Z \cdot \sigma_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$$

where μ1 is the mean of the baseline or control group, μ2 is the mean of the treatment group, n1 is the sample size of the baseline or control group, n2 is the sample size of the treatment group, and σp is the pooled standard deviation of the two samples. The expression multiplied by Z is the sample estimate of the standard error (SE) of the difference (unless the entire population has been measured, in which case there is no sampling involved in the calculation).
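
The following sketch computes a two-sided Z-interval for the difference between two means using a pooled standard deviation. The function name `two_sample_mean_ci` is hypothetical, it assumes the usual degrees-of-freedom weighting for the pooled variance, and the data are made up.

```python
from math import sqrt
from statistics import NormalDist, fmean, variance

def two_sample_mean_ci(control, treatment, conf=0.95):
    """Two-sided Z-interval for mean(treatment) - mean(control), pooled SD."""
    n1, n2 = len(control), len(treatment)
    # Pooled variance: sample variances weighted by their degrees of freedom
    pooled_var = ((n1 - 1) * variance(control) + (n2 - 1) * variance(treatment)) / (n1 + n2 - 2)
    se = sqrt(pooled_var) * sqrt(1 / n1 + 1 / n2)  # standard error of the difference
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    diff = fmean(treatment) - fmean(control)
    return diff - z * se, diff + z * se

control = [1, 2, 3, 4, 5]
treatment = [3, 4, 5, 6, 7]
low, high = two_sample_mean_ci(control, treatment)
print(round(low, 2), round(high, 2))  # 0.04 3.96
```

Both groups here have a sample variance of 2.5, so the standard error of the difference is exactly 1 and the interval is simply 2 ± 1.96.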

In both confidence interval formulas Z is the score statistic corresponding to the desired confidence level. The Z-score for a two-sided interval at confidence level 1 − α (e.g. 0.90, so α = 0.10) is $Z_{1-\alpha/2}$, revealing that a two-sided interval, similarly to a two-sided p-value, is calculated by conjoining two one-sided intervals, each with half the error rate. E.g. a Z-score of 1.6449 is used for a 0.95 (95%) one-sided confidence interval and a 90% two-sided interval, while 1.9600 is used for a 0.975 (97.5%) one-sided confidence interval and a 0.95 (95%) two-sided interval.

Therefore it is important to use the right kind of interval (see One-sided vs. two-sided intervals below). Our confidence interval calculator will output both one-sided bounds, but it is up to the user to choose the correct one, based on the inference or estimation task at hand. The adequate interval is determined by the question you are looking to answer.

Common critical values Z

Below is a table with common critical values used for constructing two-sided confidence intervals for statistics with normally-distributed errors.

| Two-sided confidence level | Critical value (Z) |
|---|---|
| 80% | 1.2816 |
| 90% | 1.6449 |
| 95% | 1.9600 |
| 97.5% | 2.2414 |
| 98% | 2.3263 |
| 99% | 2.5758 |
| 99.9% | 3.2905 |

For one-sided intervals, use the critical value of a two-sided interval with twice the error rate. E.g. for a 95% one-sided interval (5% error rate) use the critical value for a 90% two-sided interval (10% error rate) above: 1.6449.
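
Critical values like these can be computed directly from the inverse of the standard normal CDF, here via Python's `statistics.NormalDist`. The helper names `z_two_sided` and `z_one_sided` are illustrative.

```python
from statistics import NormalDist

def z_two_sided(conf):
    """Two-sided critical value Z_{1 - alpha/2}, where alpha = 1 - conf."""
    return NormalDist().inv_cdf(1 - (1 - conf) / 2)

def z_one_sided(conf):
    """One-sided critical value Z_{1 - alpha}, where alpha = 1 - conf."""
    return NormalDist().inv_cdf(conf)

for level in (0.80, 0.90, 0.95, 0.975, 0.98, 0.99, 0.999):
    print(f"{level:.1%} two-sided: Z = {z_two_sided(level):.4f}")

# A 95% one-sided interval uses the same Z as a 90% two-sided one
print(f"{z_one_sided(0.95):.4f} {z_two_sided(0.90):.4f}")  # 1.6449 1.6449
```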

How to interpret a confidence interval

Confidence intervals are useful in visualizing the full range of effect sizes compatible with the data. Basically, any value outside of the interval is rejected: a null hypothesis with that value would be rejected by a null hypothesis significance test (NHST) whose significance threshold α equals one minus the interval's confidence level (e.g. α = 0.05 for a 95% interval), since its p-value would fall below α. Conversely, any value inside the interval cannot be rejected, thus when the null hypothesis of interest is covered by the interval it cannot be rejected. The latter, of course, assumes that there is a way to calculate exact interval bounds: many types of confidence intervals achieve their nominal coverage only approximately, that is, their coverage is not guaranteed. This is especially true in complicated scenarios not covered by this confidence interval calculator.
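
The correspondence between intervals and tests can be illustrated with a Z-interval and the matching two-sided Z-test: a null value lies outside the 95% interval exactly when its p-value is below 0.05. The estimate, standard error and null values below are hypothetical.

```python
from statistics import NormalDist

def z_interval(estimate, se, conf):
    """Two-sided Z-interval: estimate ± Z * se."""
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    return estimate - z * se, estimate + z * se

def two_sided_p(estimate, se, null_value):
    """Two-sided p-value of a Z-test of H0: true value == null_value."""
    z = (estimate - null_value) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical estimate and standard error
estimate, se, conf = 2.0, 1.0, 0.95
low, high = z_interval(estimate, se, conf)
for null in (-0.5, 0.0, 1.0, 3.9, 4.5):
    outside = not (low <= null <= high)
    rejected = two_sided_p(estimate, se, null) < 1 - conf
    print(null, outside, rejected)  # the two flags agree for every null
```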

The above essentially means that the values outside the interval are the ones we can make inferences about. For the values within the interval we can only say that they cannot be rejected given the data at hand. When assessing the effect sizes that would be refuted by the data, you can construct as many confidence intervals at different confidence levels from the same set of data as you want - this is not a multiple testing issue. A better approach is to calculate the severity criterion of the null of interest, which will also allow you to make decisions about accepting the null.

What then, if our null hypothesis of interest is completely outside the observed confidence interval? What inference can we make from seeing a calculation result which was quite improbable if the null was true?

Logically, we can infer one of three things:

- There is a true effect from the tested treatment or intervention.

- There is no true effect, but we happened to observe a rare outcome.

- The statistical model for computing the confidence interval is invalid (does not reflect reality).

Obviously, one can't simply jump to the first conclusion and claim it with one hundred percent confidence. This would go against the whole idea of the confidence interval. Instead, we can say that at the 95% confidence level (or other level chosen) we can reject the null hypothesis. In order to use the confidence interval as part of a decision process you need to consider external factors, which are part of the experimental design process: deciding on the confidence level, sample size and power (power analysis), and the expected effect size, among other things.

Common misinterpretations of confidence intervals

While confidence intervals tend to lead to fewer misinterpretations than p-values, they are still ripe for misuse or bad interpretations. Here are some of the most popular ones, according to Greenland et al. [1].

Probability statements about specific intervals

Strictly speaking, an interval computed using any CI calculator either contains or does not contain the true value. Therefore, it would be incorrect to state about a particular 99% (or any other level) confidence interval that it has a 99% probability of containing the true effect or true value. What you can say is that the procedure used to construct the intervals will produce intervals that contain the true value 99% of the time.

The reverse statement would be that there is just a 1% probability that the true value is outside of the interval. This is also incorrect, as it assigns probability to a hypothesis instead of to the testing procedure. What you can say is that, whichever value is the true one, intervals constructed by this procedure will exclude it only 1% of the time. Results from this confidence interval calculator should under no circumstances be interpreted as degrees of belief.

A 95% confidence interval predicts where 95% of estimates from future studies will fall

This mistake is common among inexperienced researchers, but a confidence interval makes no such prediction. Usually the probability that outcomes from future experiments fall within any specific interval is considerably lower than the interval's confidence level.
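
Under simple assumptions (normally distributed estimates, a known standard error, and a replication of the same size), this capture probability can be computed directly. The difference between two independent estimates has variance 2 × SE², so a half-width of Z × SE corresponds to Z/√2 standard deviations of that difference. The function name `replication_capture` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def replication_capture(conf=0.95):
    """Average probability that a same-sized replication's estimate
    falls inside the original study's two-sided Z-interval."""
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    # Replication minus original ~ Normal(0, 2 * SE^2)
    return 2 * NormalDist().cdf(z / sqrt(2)) - 1

print(round(replication_capture(), 3))  # 0.834
```

So on average only about 83% of same-sized replications land inside an original 95% interval, below the nominal 95%.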

An interval containing the null is less precise than one excluding it

How precise an interval is does not depend on whether it contains the null. The precision of a confidence interval is determined by its width: the narrower the interval, the more precise the estimate drawn from the data.

One-sided vs. two-sided intervals

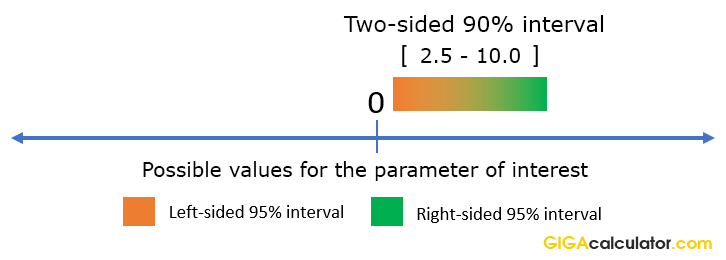

Although most researchers customarily report confidence intervals in their two-sided form, this can often be misleading. Such is the case when scientists are interested in whether a particular value below or above the interval can be excluded at a given significance level. A one-sided interval, in which one bound is plus or minus infinity, is appropriate when the null hypothesis or the statement of interest concerns values lying below the lower limit or above the upper limit. By design, a two-sided confidence interval is constructed as the overlap of two one-sided intervals, each at half the error rate [2].

For example, if the calculator produced the two-sided 90% interval (2.5, 10), we can say that values less than 2.5 are excluded with 95% confidence, precisely because a 90% two-sided interval is nothing more than two conjoined 95% one-sided intervals.
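
To make this concrete, the sketch below picks a hypothetical estimate (6.25) and standard error so that the 90% two-sided Z-interval is (2.5, 10), then shows that the 95% one-sided lower bound is the same 2.5. The helper names are illustrative.

```python
from statistics import NormalDist

def two_sided(estimate, se, conf):
    """Two-sided Z-interval: estimate ± Z_{1 - alpha/2} * se."""
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    return estimate - z * se, estimate + z * se

def one_sided_lower(estimate, se, conf):
    """Lower bound of the one-sided interval [bound, +infinity)."""
    return estimate - NormalDist().inv_cdf(conf) * se

# Estimate and SE chosen so that the 90% two-sided interval is (2.5, 10)
estimate = 6.25
se = 3.75 / NormalDist().inv_cdf(0.95)
print(two_sided(estimate, se, 0.90))        # approximately (2.5, 10.0)
print(one_sided_lower(estimate, se, 0.95))  # approximately 2.5
```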

Therefore, to make directional statements based on two-sided intervals, one needs to adjust the confidence level attached to the statement: a bound of a two-sided interval at level 1 − α supports a one-sided statement at level 1 − α/2. In such cases it is better to use the appropriate one-sided interval instead, to avoid confusion.

Confidence intervals for relative difference

When comparing two independent groups where the variable of interest is the relative difference (a.k.a. relative change, percent change, percentage difference) rather than the absolute difference between the two means or proportions, a different confidence interval needs to be constructed. This is because calculating the relative difference involves an additional division by a random variable, the observed mean or conversion rate of the control group during the experiment, which adds variance to the estimate.

In simulations [3] using the formulas implemented in this confidence interval calculator, naively extrapolating a confidence interval with 95% coverage for the absolute difference to the relative difference resulted in coverage between 90% and 94.8%, depending on the size of the true difference. That is an error rate anywhere from slightly above the nominal 5% to about twice it. By contrast, a properly constructed 95% confidence interval for the relative difference had coverage of about 95%.

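The formula for a confidence interval around the relative difference (percent effect) is [4]:

$$\text{CI bounds} = \frac{(\text{RelDiff} + 1)\left(1 \pm Z\sqrt{CV_1^2 + CV_2^2 - Z^2\, CV_1^2\, CV_2^2}\right)}{1 - Z^2\, CV_1^2} - 1$$

where RelDiff is calculated as (μ2 / μ1 − 1), CV1 is the coefficient of variation for the control group's mean and CV2 is the coefficient of variation for the treatment group's mean (each being the standard error of the mean divided by the mean), while Z is the critical value expressed as a standardized score. Selecting "relative difference" in the calculator interface switches it to using the above formula.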
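
Below is a sketch of a Fieller-type interval for the relative difference μ2/μ1 − 1, assuming the referenced formula is the standard Fieller-type interval for a ratio of two independent means. The function name `relative_difference_ci` and the conversion rates are hypothetical. The sketch also assumes positive means and takes each CV as the standard error of the mean divided by the mean. Note that the resulting interval is not symmetric around the observed relative difference.

```python
from math import sqrt
from statistics import NormalDist

def relative_difference_ci(mean1, se1, mean2, se2, conf=0.95):
    """Fieller-type two-sided CI for the relative difference mean2 / mean1 - 1.

    Assumptions: both means are positive, the groups are independent, and
    CV = standard error of the mean / mean for each group.
    """
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    cv1, cv2 = se1 / mean1, se2 / mean2
    denom = 1 - z**2 * cv1**2
    if denom <= 0:
        # Control mean is too uncertain: the interval is unbounded
        raise ValueError("Z * CV1 must be below 1 for a finite interval")
    rel_diff = mean2 / mean1 - 1
    root = z * sqrt(cv1**2 + cv2**2 - z**2 * cv1**2 * cv2**2)
    return ((rel_diff + 1) * (1 - root) / denom - 1,
            (rel_diff + 1) * (1 + root) / denom - 1)

# Hypothetical conversion rates with 10,000 users per group
p1, p2, n = 0.10, 0.11, 10_000
se1, se2 = sqrt(p1 * (1 - p1) / n), sqrt(p2 * (1 - p2) / n)
low, high = relative_difference_ci(p1, se1, p2, se2)
print(f"relative difference 95% CI: ({low:.2%}, {high:.2%})")
```

The upper bound lies further from the point estimate than the lower bound, reflecting the extra uncertainty from dividing by the control group's noisy estimate.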

References

[1] Greenland et al. (2016) "Statistical tests, P values, confidence intervals, and power: a guide to misinterpretations", European Journal of Epidemiology 31:337–350

[2] Georgiev G.Z. (2017) "One-tailed vs Two-tailed Tests of Significance in A/B Testing", [online] https://blog.analytics-toolkit.com/2017/one-tailed-two-tailed-tests-significance-ab-testing/ (accessed Apr 28, 2018)

[3] Georgiev G.Z. (2018) "Confidence Intervals & P-values for Percent Change / Relative Difference", [online] https://blog.analytics-toolkit.com/2018/confidence-intervals-p-values-percent-change-relative-difference/ (accessed Jun 15, 2018)

[4] Kohavi et al. (2009) "Controlled experiments on the web: survey and practical guide", Data Mining and Knowledge Discovery 18:151

Cite this calculator & page

If you'd like to cite this online calculator resource and information as provided on the page, you can use the following citation:

Georgiev G.Z., "Confidence Interval Calculator", [online] Available at: https://www.gigacalculator.com/calculators/confidence-interval-calculator.php URL [Accessed Date: 24 Feb, 2026].


Georgi Georgiev is an applied statistician with background in statistical analysis of online controlled experiments, including developing statistical software, writing over one hundred articles and papers, as well as the popular book "Statistical Methods in Online A/B Testing".
