P-value Calculator

Statistical significance calculator to easily calculate the p-value and determine whether the difference between two proportions or means (independent groups) is statistically significant. T-test calculator & z-test calculator to compute the Z-score or T-score for inference about absolute or relative difference (percentage change, percent effect). Suitable for analysis of simple A/B tests.

- Using the p-value calculator

- What is "p-value" and "significance level"

- P-value formula

- Why do we need a p-value?

- How to interpret a statistically significant result / low p-value

- P-value and significance for relative difference in means or proportions

Using the p-value calculator

This statistical significance calculator allows you to perform a post-hoc statistical evaluation of a set of data when the outcome of interest is difference of two proportions (binomial data, e.g. conversion rate or event rate) or difference of two means (continuous data, e.g. height, weight, speed, time, revenue, etc.). You can use a Z-test (recommended) or a T-test to find the observed significance level (p-value statistic). The Student's T-test is recommended mostly for very small sample sizes, e.g. n < 30. In order to avoid type I error inflation which might occur with unequal variances the calculator automatically applies the Welch's T-test instead of Student's T-test if the sample sizes differ significantly or if one of them is less than 30 and the sampling ratio is different than one.
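As a rough illustration of what Welch's T-test computes, the sketch below derives the T-score and the Welch-Satterthwaite degrees of freedom from two raw samples. The function name `welch_t` and the sample values are hypothetical, and this is not the calculator's actual code. Turning the score into a p-value additionally requires the T-distribution CDF, which is omitted here.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return (t_score, degrees_of_freedom) for Welch's unequal-variance T-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error of each group mean (sample variance / n).
    se_sq_a = variance(sample_a) / n_a
    se_sq_b = variance(sample_b) / n_b
    t_score = (mean(sample_b) - mean(sample_a)) / sqrt(se_sq_a + se_sq_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se_sq_a + se_sq_b) ** 2 / (
        se_sq_a ** 2 / (n_a - 1) + se_sq_b ** 2 / (n_b - 1)
    )
    return t_score, df


# Illustrative data only: unequal sample sizes and unequal variances.
t_score, df = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10, 12])
print(round(t_score, 3), round(df, 2))
```

Unlike Student's T-test, the degrees of freedom here are generally not a whole number and fall between the smaller group's n - 1 and the combined n - 2.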

If entering proportions data, you need to know the sample sizes of the two groups as well as the number or rate of events. These can be entered as proportions (e.g. 0.10), percentages (e.g. 10%) or just raw numbers of events (e.g. 50).

If entering means data, simply copy/paste or type in the raw data, each observation separated by comma, space, new line or tab. Copy-pasting from a Google or Excel spreadsheet works fine.
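For readers who want to prepare such data programmatically, here is a minimal sketch of how text with mixed separators could be turned into numbers. The helper `parse_observations` is a hypothetical name and is not how the calculator itself parses input.

```python
import re


def parse_observations(text):
    """Split raw text on commas and any whitespace (spaces, tabs, new lines)."""
    tokens = re.split(r"[,\s]+", text.strip())
    # Skip empty tokens, which appear when the input is empty or starts with a comma.
    return [float(token) for token in tokens if token]


print(parse_observations("1.5, 2\t3\n4 5,6"))
```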

The p-value calculator will output: p-value, significance level, T-score or Z-score (depending on the choice of statistical hypothesis test), degrees of freedom, and the observed difference. For means data it will also output the sample sizes, means, and pooled standard error of the mean. The p-value is for a one-sided hypothesis (one-tailed test), allowing you to infer the direction of the effect (more on one vs. two-tailed tests). However, the probability value for the two-sided hypothesis (two-tailed p-value) is also calculated and displayed, although it should see little to no practical applications.

Warning: You must have fixed the sample size / stopping time of your experiment in advance, otherwise you will be guilty of optional stopping (fishing for significance) which will inflate the type I error of the test rendering the statistical significance level unusable. Also, you should not use this significance calculator for comparisons of more than two means or proportions, or for comparisons of two groups based on more than one metric. If a test involves more than one treatment group or more than one outcome variable you need a more advanced tool which corrects for multiple comparisons and multiple testing. This statistical calculator might help.

What is "p-value" and "significance level"

The p-value is a heavily used test statistic that quantifies the uncertainty of a given measurement, usually as part of an experiment or medical trial, but also in observational studies. By definition, it is inseparable from inference through a Null-Hypothesis Statistical Test (NHST). In an NHST we pose a null hypothesis reflecting the currently established theory or a model of the world we don't want to dismiss without solid evidence (the tested hypothesis), and an alternative hypothesis: an alternative model of the world. For example, the statistical null hypothesis could be that exposure to ultraviolet light for prolonged periods of time reduces or does not change the risk of developing skin cancer, while the alternative hypothesis can be that it increases that risk.

In this framework a p-value is defined as the probability of observing the result which was observed, or a more extreme one, assuming the null hypothesis is true. In notation this is expressed as:

p(x0) = Pr(d(X) > d(x0); H0)

where x0 is the observed data (x1,x2...xn), d is a special function (statistic, e.g. calculating a Z-score), X is a random sample (X1,X2...Xn) from the sampling distribution of the null hypothesis. This equation is used in this p-value calculator and can be visualized as such:

Therefore the p-value expresses the probability of committing a type I error: rejecting the null hypothesis if it is in fact true. See below for a full proper interpretation of the p-value statistic.
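The definition p(x0) = Pr(d(X) > d(x0); H0) can also be approximated by brute force: simulate many data sets under the null hypothesis, compute the statistic d for each, and count how often it is at least as extreme as the observed one. The sketch below does this for two proportions. It assumes that the null is represented by both groups sharing the pooled event rate and that d is a pooled Z-score. The function names, event counts and seed are all hypothetical.

```python
import random
from math import sqrt


def z_statistic(events_a, n_a, events_b, n_b):
    """d(x): pooled two-proportion Z-score of group B versus group A."""
    pooled = (events_a + events_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return 0.0 if se == 0 else (events_b / n_b - events_a / n_a) / se


def simulated_p_value(events_a, n_a, events_b, n_b, draws=2000, seed=1):
    """Estimate Pr(d(X) >= d(x0); H0) by simulating data under the null."""
    rng = random.Random(seed)
    observed = z_statistic(events_a, n_a, events_b, n_b)
    null_rate = (events_a + events_b) / (n_a + n_b)  # assumed common rate under H0
    at_least_as_extreme = 0
    for _ in range(draws):
        sim_a = sum(rng.random() < null_rate for _ in range(n_a))
        sim_b = sum(rng.random() < null_rate for _ in range(n_b))
        if z_statistic(sim_a, n_a, sim_b, n_b) >= observed:
            at_least_as_extreme += 1
    return at_least_as_extreme / draws


# Hypothetical experiment: 20/200 events in control, 36/200 in treatment.
print(simulated_p_value(20, 200, 36, 200))
```

With enough draws the simulated proportion should approach the analytical one-tailed p-value for the same statistic.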

Another way to think of the p-value is as a more user-friendly expression of how many standard deviations away from the mean expected under the null hypothesis a given observation is. For example, in a one-tailed test of significance for a normally-distributed variable like the difference of two means, a result which is 1.6448 standard deviations away (1.6448σ) results in a p-value of 0.05.
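The correspondence between standard deviations and p-values can be checked with the standard normal CDF from Python's standard library. This is only a sketch, and `one_tailed_p` is a hypothetical helper name.

```python
from statistics import NormalDist


def one_tailed_p(z_score):
    """Upper-tail probability of a standard normal variable beyond z_score."""
    return NormalDist().cdf(-z_score)


print(round(one_tailed_p(1.6448), 4))  # close to 0.05
```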

The term "statistical significance" or "significance level" is often used in conjunction with the p-value, either to say that a result is "statistically significant", which has a specific meaning in statistical inference (see interpretation below), or to refer to the percentage representation of the level of significance: (1 - p-value), e.g. a p-value of 0.05 is equivalent to a significance level of 95% ((1 - 0.05) * 100). A significance level can also be expressed as a T-score or Z-score, e.g. a result would be considered significant only if the Z-score is in the critical region above 1.96 (equivalent to a one-tailed p-value of 0.025).
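The two conversions mentioned above are simple enough to sketch in a few lines. The helper names are hypothetical, and the critical value is computed for a one-tailed test on the normal distribution.

```python
from statistics import NormalDist


def significance_percent(p_value):
    """Express a p-value as a significance level in percent: (1 - p) * 100."""
    return (1 - p_value) * 100


def critical_z(threshold):
    """Z-score above which a one-tailed p-value falls below the threshold."""
    return NormalDist().inv_cdf(1 - threshold)


print(significance_percent(0.05))   # approximately 95
print(round(critical_z(0.025), 2))  # 1.96
```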

P-value formula

There are different ways to arrive at a p-value depending on the assumption about the underlying distribution. This tool supports two such distributions: the Student's T-distribution and the normal Z-distribution (Gaussian) resulting in a T test and a Z test, respectively.

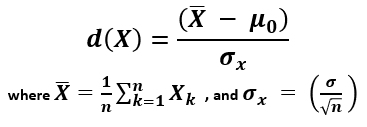

In both cases, to find the p-value start by estimating the variance and standard deviation, then derive the standard error of the mean, after which a standard score is found using the formula [2]:

Z = (X̄ - μ0) / σx̄

X̄ (read "X bar") is the arithmetic mean of the population baseline or the control, μ0 is the observed mean / treatment group mean, while σx̄ is the standard error of the mean (SEM, or standard deviation of the error of the mean).

When calculating a p-value using the Z-distribution the formula is Φ(Z) or Φ(-Z) for lower and upper-tailed tests, respectively. Φ is the standard normal cumulative distribution function and a Z-score is computed. In this mode the tool functions as a Z score calculator.

When using the T-distribution the formula is Tn(Z) or Tn(-Z) for lower and upper-tailed tests, respectively. Tn is the cumulative distribution function for a T-distribution with n degrees of freedom and so a T-score is computed. Selecting this mode makes the tool behave as a T test calculator.

The population standard deviation is often unknown and is thus estimated from the samples, usually from the pooled sample variance. Knowing or estimating the standard deviation is a prerequisite for using a significance calculator. Note that, according to the Central Limit Theorem (CLT), differences in means or proportions are approximately normally distributed when the samples are sufficiently large, hence a Z-score is the relevant statistic for such a test.
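The chain of steps described in this section (variance, then standard error, then standard score, then p-value) can be sketched for two samples of continuous data as follows. The pooled variance estimator is an assumption taken from the paragraph above, the function name `pooled_z_test` is hypothetical, and the tiny samples are for illustration only. Samples this small would normally call for the T-distribution.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def pooled_z_test(control, treatment):
    """Return (z, lower_tail_p, upper_tail_p) for a difference of two means."""
    n_c, n_t = len(control), len(treatment)
    # Step 1: pooled estimate of the common variance.
    pooled_var = (
        (n_c - 1) * variance(control) + (n_t - 1) * variance(treatment)
    ) / (n_c + n_t - 2)
    # Step 2: standard error of the difference in means.
    sem = sqrt(pooled_var * (1 / n_c + 1 / n_t))
    # Step 3: standard score of the observed difference.
    z = (mean(treatment) - mean(control)) / sem
    # Step 4: p-values as Phi(Z) (lower-tailed) and Phi(-Z) (upper-tailed).
    phi = NormalDist().cdf
    return z, phi(z), phi(-z)


z, p_lower, p_upper = pooled_z_test([1, 2, 3, 4, 5], [3, 4, 5, 6, 7])
print(round(z, 2), round(p_upper, 4))
```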

Why do we need a p-value?

If you are in the sciences, reporting a p-value is often a requirement by scientific journals. If you run business experiments (e.g. A/B testing), it is reported alongside confidence intervals and other estimates. However, what is the utility of p-values and by extension that of significance levels?

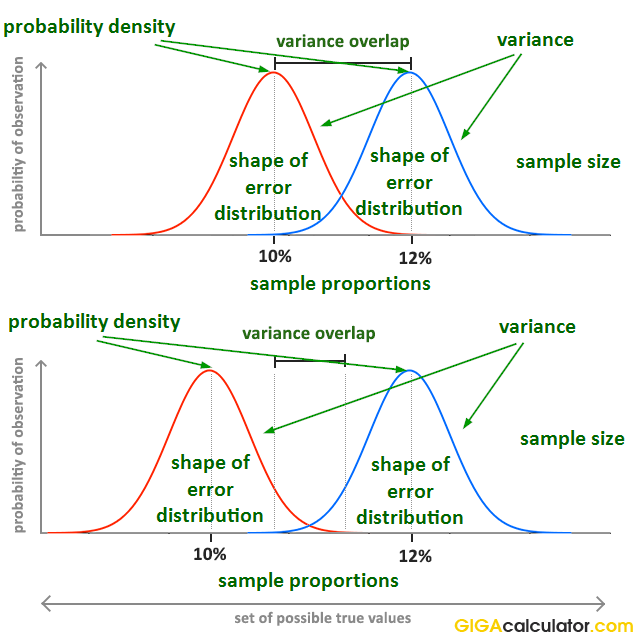

First, let us define the problem the p-value is intended to solve. People need to share information about the evidential strength of data in a way that can be easily understood and easily compared between experiments. The accompanying picture represents, albeit imperfectly, the results of two simple experiments, each ending up with the control group at a 10% event rate and the treatment group at a 12% event rate.

However, it is obvious that the evidential input of the data is not the same, demonstrating that communicating just the observed proportions or their difference (effect size) is not enough to estimate and communicate the evidential strength of the experiment. In order to fully describe the evidence and associated uncertainty, several statistics need to be communicated, for example, the sample size, sample proportions and the shape of the error distribution. Their interaction is not trivial to understand, so communicating them separately makes it very difficult for one to grasp what information is present in the data. What would you infer if told that the observed proportions are 0.1 and 0.12 (e.g. conversion rate of 10% and 12%), the sample sizes are 10,000 users each, and the error distribution is binomial?
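To make the question above concrete, the sketch below computes a one-tailed p-value for the same observed rates (10% vs. 12%) at two sample sizes. It assumes equal group sizes, a pooled-variance Z-test and a normal approximation to the binomial. The function name and the smaller sample size of 100 are hypothetical choices made for contrast.

```python
from math import sqrt
from statistics import NormalDist


def proportions_p_value(rate_a, rate_b, n_per_group):
    """Return (z, one_tailed_p) for two observed rates with equal group sizes."""
    pooled = (rate_a + rate_b) / 2  # valid because the groups are equal in size
    se = sqrt(pooled * (1 - pooled) * 2 / n_per_group)
    z = (rate_b - rate_a) / se
    return z, NormalDist().cdf(-z)


for n in (100, 10_000):
    z, p = proportions_p_value(0.10, 0.12, n)
    print(n, round(z, 2), p)
```

The observed difference is identical in both cases, yet the p-value at 10,000 users per group is smaller by several orders of magnitude, which is the kind of information that the proportions alone do not convey.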

Instead of communicating several statistics, a single statistic was developed that communicates all the necessary information in one piece: the p-value. A p-value was first derived in the late 18th century by Pierre-Simon Laplace, when he observed data about a million births that showed an excess of boys compared to girls. Using the calculation of significance he argued that the effect was real but unexplained at the time. We now know this to be true, and there are several explanations for the phenomenon coming from evolutionary biology. Statistical significance calculations were formally introduced in the early 20th century by Pearson and popularized by Sir Ronald Fisher in his works, most notably "The Design of Experiments" (1935) [1], in which p-values were featured extensively. In business settings significance levels and p-values see widespread use in process control and various business experiments (such as online A/B tests, e.g. as part of conversion rate optimization, marketing optimization, etc.).

How to interpret a statistically significant result / low p-value

Saying that a result is statistically significant means that the p-value is below the evidential threshold (significance level) decided for the statistical test before it was conducted. For example, if observing something which would only happen 1 out of 20 times if the null hypothesis is true is considered sufficient evidence to reject the null hypothesis, the threshold will be 0.05. In such a case, observing a p-value of 0.025 would mean that the result is interpreted as statistically significant.

But what does that really mean? What inference can we make from seeing a result which was quite improbable if the null was true?

Observing any given low p-value can mean one of three things [3]:

- There is a true effect from the tested treatment or intervention.

- There is no true effect, but we happened to observe a rare outcome. The lower the p-value, the rarer (less likely, less probable) the outcome.

- The statistical model is invalid (does not reflect reality).

Obviously, one can't simply jump to the first conclusion and claim it with one hundred percent certainty, as this would go against the whole idea of the p-value and statistical significance. In order to use p-values as a part of a decision process, external factors that are part of the experimental design process need to be considered. These include deciding on the significance level (threshold), sample size and power (power analysis), and the expected effect size, among other things. If you are happy going forward with as much (or as little) uncertainty as is indicated by the p-value calculation, then you have some quantifiable guarantees related to the effect and future performance of whatever you are testing, e.g. the efficacy of a vaccine or the conversion rate of an online shopping cart.

Note that it is incorrect to state that a Z-score or a p-value obtained from any statistical significance calculator tells how likely it is that the observation is "due to chance" or conversely - how unlikely it is to observe such an outcome due to "chance alone". P-values are calculated under specified statistical models hence 'chance' can be used only in reference to that specific data generating mechanism and has a technical meaning quite different from the colloquial one. For a deeper take on the p-value meaning and interpretation, including common misinterpretations, see: definition and interpretation of the p-value in statistics.

P-value and significance for relative difference in means or proportions

When comparing two independent groups and the variable of interest is the relative difference (a.k.a. relative change, percent change, percentage difference), as opposed to the absolute difference between the two means or proportions, the standard deviation of the variable is different, which compels a different way of calculating p-values [5]. The need for a different statistical test is due to the fact that calculating relative difference involves an additional division by a random variable: the event rate of the control during the experiment. This adds more variance to the estimate, so the resulting p-value is usually higher (the result will be less statistically significant). What this means is that p-values from a statistical hypothesis test for absolute difference in means may nominally meet the significance level, but they will be inadequate for inference about the relative difference, which is the hypothesis at hand.

In simulations I performed the p-value for relative difference was about 50% higher than the nominal one: a 0.05 p-value for absolute difference corresponded to probability of about 0.075 of observing the relative difference corresponding to the observed absolute difference. Therefore, if you are using p-values calculated for absolute difference when making an inference about percentage difference, you are likely reporting error rates which are only about two-thirds of the actual ones, thus significantly overstating the statistical significance of your results and underestimating the uncertainty attached to them.
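One common way to account for the extra variance from dividing by the control rate is the delta method approximation for the standard error of a ratio. The sketch below uses it to compare one-tailed p-values for absolute and relative difference on the same data. This is an assumption made for illustration and not necessarily the method used in the simulations above or in this calculator. The function name and the event counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def abs_vs_rel_p_values(events_a, n_a, events_b, n_b):
    """Return one-tailed p-values for (absolute, relative) difference of B vs. A."""
    p_a, p_b = events_a / n_a, events_b / n_b
    var_a = p_a * (1 - p_a) / n_a  # variance of the control rate estimate
    var_b = p_b * (1 - p_b) / n_b  # variance of the treatment rate estimate
    # Absolute difference: the variances of the two independent rates add up.
    z_abs = (p_b - p_a) / sqrt(var_a + var_b)
    # Relative difference p_b / p_a - 1: delta method standard error of a ratio.
    ratio = p_b / p_a
    se_rel = ratio * sqrt(var_a / p_a ** 2 + var_b / p_b ** 2)
    z_rel = (ratio - 1) / se_rel
    phi = NormalDist().cdf
    return phi(-z_abs), phi(-z_rel)


# Hypothetical data: 200/2000 events in control, 240/2000 in treatment.
p_abs, p_rel = abs_vs_rel_p_values(200, 2000, 240, 2000)
print(p_abs, p_rel)
```

Under this approximation, whenever the treatment rate exceeds the control rate the Z-score for relative difference is smaller than the one for absolute difference, so its p-value is larger.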

In short - switching from absolute to relative difference requires a different statistical hypothesis test. With this calculator you can avoid the mistake of using the wrong test simply by indicating the inference you want to make.

References

[1] Fisher R.A. (1935) "The Design of Experiments", Edinburgh: Oliver & Boyd

[2] Mayo D.G., Spanos A. (2010) "Error Statistics", in P. S. Bandyopadhyay & M. R. Forster (Eds.), Philosophy of Statistics, (7, 152–198). Handbook of the Philosophy of Science. The Netherlands: Elsevier.

[3] Georgiev G.Z. (2017) "Statistical Significance in A/B Testing – a Complete Guide", [online] https://blog.analytics-toolkit.com/2017/statistical-significance-ab-testing-complete-guide/ (accessed Apr 27, 2018)

[4] Mayo D.G., Spanos A. (2006) "Severe Testing as a Basic Concept in a Neyman–Pearson Philosophy of Induction", British Society for the Philosophy of Science, 57:323-357

[5] Georgiev G.Z. (2018) "Confidence Intervals & P-values for Percent Change / Relative Difference", [online] https://blog.analytics-toolkit.com/2018/confidence-intervals-p-values-percent-change-relative-difference/ (accessed May 20, 2018)

Cite this calculator & page

If you'd like to cite this online calculator resource and information as provided on the page, you can use the following citation:

Georgiev G.Z., "P-value Calculator", [online] Available at: https://www.gigacalculator.com/calculators/p-value-significance-calculator.php URL [Accessed Date: 15 Feb, 2026].


Georgi Georgiev is an applied statistician with background in statistical analysis of online controlled experiments, including developing statistical software, writing over one hundred articles and papers, as well as the popular book "Statistical Methods in Online A/B Testing".
